AI slop is coming for healthcare. Time to ask the uncomfortable questions

Search behaviour is shifting. Trust in healthcare is eroding. Patients are smarter than ever. In an age of AI slop, here are the questions I’d ask any healthtech startup.

I'm a fan of the dead internet theory: A flood of annoying AI content (= AI slop) will make social media (and the broader internet we know) an unbearable place. Instead, 99% of the internet will consist of bots selling stuff to each other. In a weird turn, real-life interaction might become more valuable again: We will come full circle.

Call me dramatic, but we are seeing early signs of it: A recent analysis found that 54% of long-form posts on LinkedIn are AI-generated. On Pinterest, it’s even worse - some users say 80% of the photos on the platform are AI-generated. YouTube shows a similar trajectory, with 4 of the top 10 channels (by subscriber count) using AI-created videos in May 2025.

Why am I bringing this up as a healthtech VC?

Sure, healthcare feels like a lagging industry. But if trust, search behaviour, and online content are changing this fast in consumer tech, healthcare will follow.

Today, I want to speculate on the downstream effects once all of it reaches healthcare. I’ll lay out three observations - and the questions I’d be asking our portfolio companies. Not because we have the answers, but because we’ll need to find them.

Observation 1: The route to content is changing

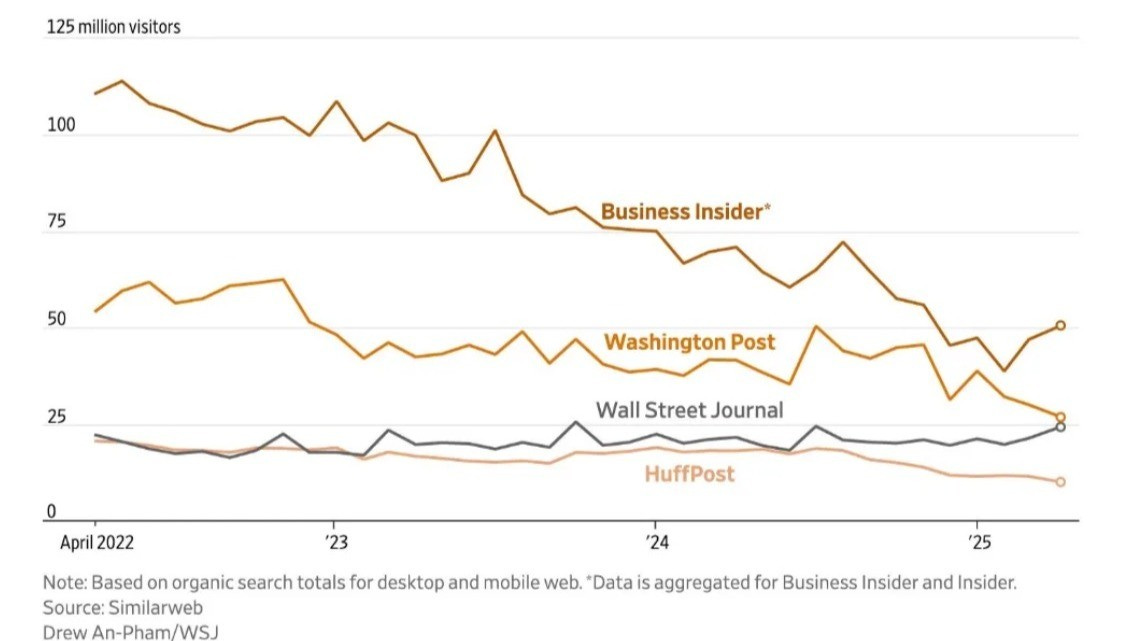

Google’s share of the search market has decreased, challenged by LLMs. In parallel, news sites have taken an even harder hit: People have stopped reading full articles and rely on AI overviews instead. I recently saw this chart, documenting a steep decline in news site visits - a 50% decline for Business Insider over the past 3 years.

Are healthcare startups prepared for this shift? I would currently ask portfolio companies two questions:

Are you already shifting from SEO to GEO (Generative Engine Optimization)? We are hearing the first pitches where healthtech founders explicitly pitch a GEO strategy.

Should you consider new customer acquisition channels as AI content renders online channels increasingly competitive? Anybody can create a TikTok video in seconds these days. Especially for high-value products, you could return to analogue channels such as in-person events or physical mailing campaigns.

Observation 2: Most new content is synthetic (and authenticity is gold)

You have all seen the ChatGPT-created posts on LinkedIn. Thousands of em-dashes and absurd “it’s not like this - it’s like that” statements. I personally skip these posts because I am looking for proprietary insights.

The commoditisation of AI content has led to an interesting shift: Authentic information and opinions are rising in value. Hand-written posts, authentic human videos and real personalities stand out these days.

This seems especially true in a trust-driven field like healthcare. As a consumer-facing healthcare startup, I’d ask myself:

How can I become the new, trusted face of healthcare throughout the AI content flood?

How can I shift towards real humans and authentic personalities to carry my message?

As AI content gets better, how will people differentiate between artificial and real content? Can this create an opening in the legacy system?

Observation 3: Patients will be smarter than doctors (and mistrust them?)

AI will change doctor-patient interaction in two ways, and both challenge their relationship:

On the one hand, we’ll see hyper-educated patients. A short history recap: The rise of Google already changed the doctor-patient dynamic dramatically. All of a sudden, patients showed up to appointments with their own diagnosis and higher (sometimes strange) expectations. The relationship moved closer to eye level. It’s a net positive development, but it introduces extra complexity that doctors struggle with. There are entire courses on this in medical school.

AI now supercharges this trend. With the current state of LLMs, any patient can be more informed than their doctor (on their specific case) with a bit of ChatGPT prep. A great win for patients - if LLMs are used correctly.

On the other hand, increasing AI content endangers trust in the conventional healthcare system. I’m worried that most healthcare providers don’t have a real online presence and will be outnumbered by trolls, activists and snake-oil-influencers. AI content outruns any fact checker.

We’re not in a great position to begin with: Under 40% of US citizens have “a lot of trust” in their healthcare system. First social media, then Covid and now AI content are eroding patients’ trust.

I am convinced we’ll need strategies to deal with the seismic shifts in trust and knowledge. Healthcare professionals will need more time and new communication strategies to account for LLMs when dealing with patients. What I’d ask myself as a practitioner:

How can I teach patients to prompt LLMs to reduce misinformation?

How do I react to AI fakes the patients have seen?

How do I stay up to date on the latest LLM advances to become a better healthcare professional/organisation?

Bottom line: With 39% of young adults consuming news via TikTok and most content on online platforms being AI-generated, the dynamic between consumers and the healthcare system will change.

It will be a challenge - but also a rare chance for new businesses to earn their spot in an encrusted system. Perhaps an authenticity/verification layer, a novel way to reach health consumers, or an entirely new healthcare brand?

If you’re building something along those lines, DM me.

Happy Monday,

Lucas