Should AI medical scribes be more than just OpenAI wrappers?

Investors, founders and healthcare providers are debating the tech depth of AI scribe startups. Let's discuss why (and where) a specialised transcription model matters.

After my recent posts about AI medical scribes, a common question in the comments was: How many of startups are just wrappers around OpenAI’s models? And isn’t that dangerous?

To dig deeper, I spoke with multiple scribe founders and consulted the Speechmatics team. They’ve been building AI transcription technology for over a decade and raised over $ 50m in funding.

Below is a breakdown of what I learned and why the real question isn’t “OpenAI vs Speechmatics” - but something more layered.

Disclaimer: Multiple components of an AI scribe tech stack use AI/machine learning models. Today, we focus on speech-to-text (STT) engines and automatic speech recognition (ASR) models, since it’s often considered the core of an ambient scribe. Besides, I’m obviously not an ML engineer, so it’s just a high-level investor view.

Why specialised ASR matters

Let’s say you’re a medical scribe founder and you’re about to choose a speech-to-text setup. You will need to decide on an automatic speech recognition (ASR) model and how to deploy it.

From what I’ve seen, you face 3 broad options (and many shades in between):

Generalist API: You buy API access to generalist models like Whisper (OpenAI) or Google STT. Someone else hosts and trains it, you use it when needed - the lazy but cheap and agile version.

Self-hosted model: Use an open-source ASR model and host it yourself - that could again be OpenAI’s Whisper. Needs more resources, but you control the model and can fine-tune it if you have the expertise.

Enterprise license: You choose a specialised, enterprise-grade ASR providers like Speechmatics. High quality, full service, multiple deployment options.

Another popular option is Deepgram, which I’d place somewhere between option 1 and 3. They’re an API solution but with specialised healthcare models.

So why not just go with OpenAI, a household name? In healthcare, there are good reasons for an enterprise-grade ASR license. Here’s how Speechmatics pitches it:

Accuracy from the ground up: General-purpose models like Whisper often predict what was likely said, not what was actually spoken. That distinction matters when one mistranscribed drug name can destroy an entire treatment plan.

Real-world training data: Speechmatics has trained on millions of hours of multilingual, accented, noisy speech. Important for clinical settings.

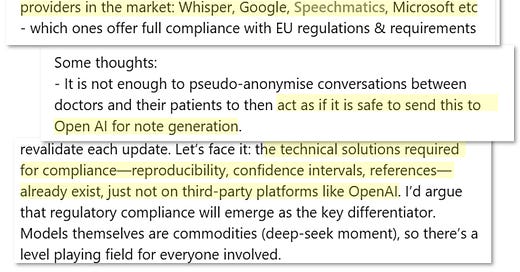

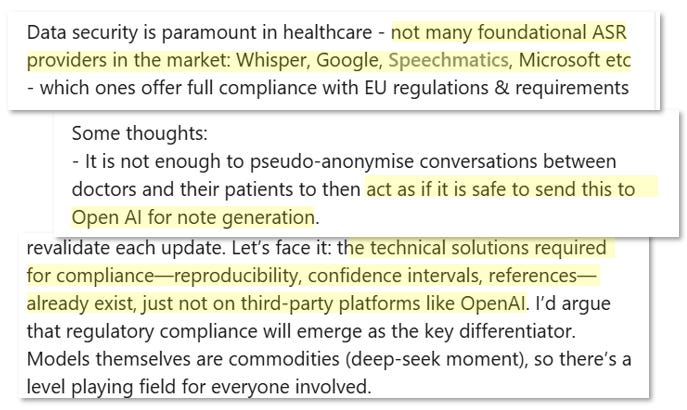

On-prem/on-device deployment: For EU healthtech startups navigating GDPR and national frameworks, pure cloud-deployment raises barriers. You often need to deploy locally to maintain control and calm your customers’ privacy concerns.

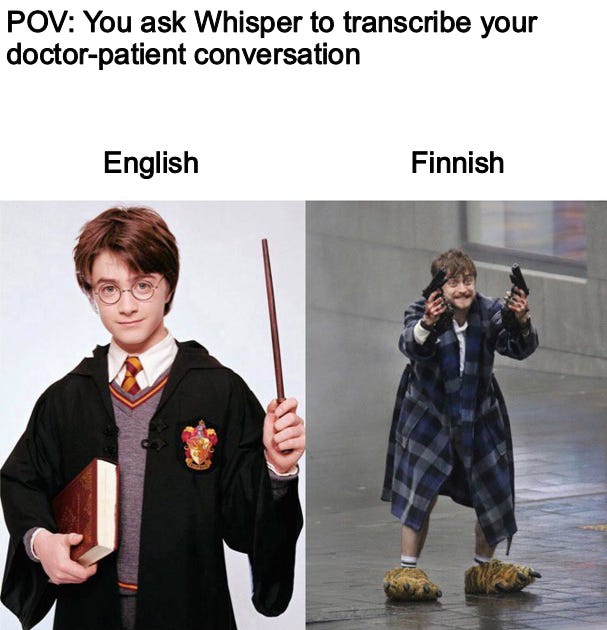

Language diversity: Most models are still English-centric. As a European VC, our investment area spans 24 official and approximately 280 total languages - this is a real concern. For example, Whisper still scores a ~24% word error rate (WER) in Finnish and 30% in Icelandic in common benchmarks.

Here’s a (shortened) snapshot from their Github page:As you can imagine, any WER over 25% becomes unusable. Meaning for many languages, Whisper is not a feasible off-the-shelf solution.

The counter-bet: Stay lean or build deep

Despite the obvious benefits of specialised ASR providers, we often see startups using generalist alternatives. I’ve noticed two clusters of startups insisting to use Whisper & co:

One type of startup will just say Whisper is “good enough” - especially in English-speaking markets. In fact, multiple founders told me transcription accuracy is not their main concern - the impact of higher accuracy on their overall business is negligible. Regarding compliance, they are willing to work in grey zones to achieve a lean go-to-market.

The other type of startup has the capacity to build a comprehensive in-house solution. They fine-tune for precision, host models locally and build pre-/post-processing steps around it for anonymisation and compliance. In other words: They don’t need a specialised ASR provider. Disclaimer: This takes significant resources and only makes sense if transcription is your core business offering.

Is it a risk tolerance game?

For founders I assume the question is not so much “Which ASR model is better?” but more “What’s my use case?”, “What risk am I willing to carry?” and “Which resources am I willing to build internally?”.

Often, the “OpenAI wrapper” discussion feels very binary. In reality, there are multiple routes to achieve an accurate, compliant and reliable scribe stack. It ranges from partnering with an enterprise license like Speechmatics to building a complex in-house setup with open-source tools and proprietary data.

Either way, startups need to account for varying national regulations and infrastructure constraints in healthcare. The ability to control where and how transcription happens is a prerequisite for scale and trust.

Conclusion: As a VC, it’s almost impossible to understand and weigh all technical details in real-time. Instead, I am looking for stack-aware teams - those who can explain why and how they balance safety, quality and agility in their scribe stack.

Happy Monday,

Lucas