ElevenLabs released a scribe. Will it impact medical AI note takers?

ElevenLabs' new Scribe V1 beats all established transcription solutions. I tested it on patient-doctor conversations.

Last Thursday, well-known AI company ElevenLabs ($180m Series C by a16z in January 2025) released an AI scribe tool: “Scribe V1”. What stood out was the scribe’s performance, which positions it as the most accurate transcription tool benchmarked to date!

Where does scribe accuracy matter most? Exactly: In high-stakes settings like healthcare. Many VCs have bet money on healthcare-specific scribes already (Nabla, Tandem, Autoscriber, Voize, … just to name European ones), making it one of the hottest healthtech markets of 2024.

Does Scribe V1 change the market dynamic? Is it even good at healthcare? I put it to the test.

What we know about Scribe V1

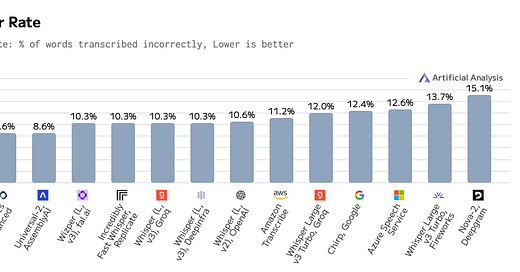

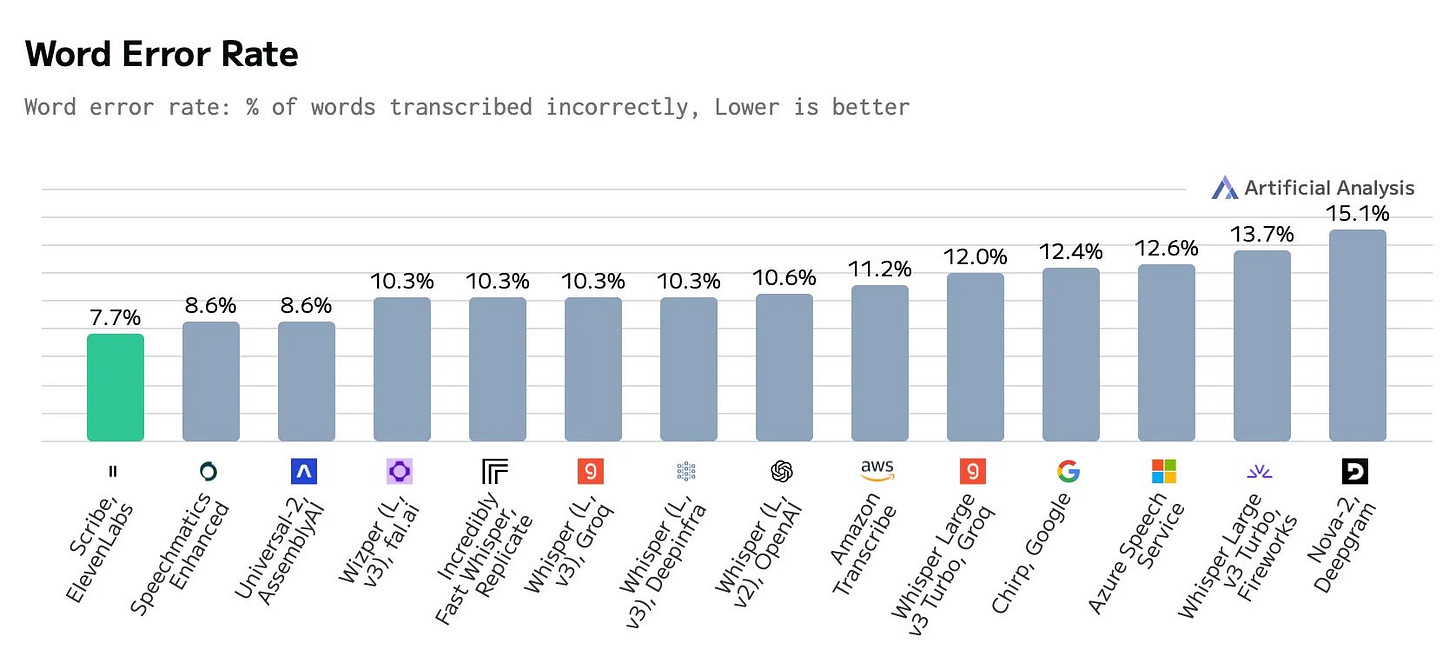

First, let’s set the scene on V1’s exceptional performance: In independent benchmarks, it achieved a lower word error rate (WER) than all established tools like OpenAI’s Whisper or Amazon’s and Google’s offerings. FYI: The WER is the share of words the tool gets wrong (substituted, dropped or wrongly inserted) in a standardized test sample.

Source: Artificial Analysis’ benchmarks
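For readers who want to see what WER means in practice, here is a minimal Python sketch. It assumes the common textbook definition (word-level edit distance divided by the number of reference words) and a naive normalization of lowercasing and splitting on whitespace. It is not necessarily how Artificial Analysis computes its scores, and the example sentence is made up for illustration, not taken from any benchmark.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    # Naive normalization (an assumption): lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing in transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Illustrative sentence: one wrong word out of six.
truth = "the patient takes 120mg tacrolimus daily"
transcript = "the patient takes 20mg tacrolimus daily"
print(f"WER: {word_error_rate(truth, transcript):.1%}")
```

Note that in this sketch a misheard dosage counts exactly as much as a misheard filler word, which is one reason WER alone says little about clinical risk.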

Interestingly, ElevenLabs charges an acceptable price of $6.7 per 1,000 audio minutes vs $16-24 for Amazon, Google and other common providers. It also transcribes at a reasonable speed of 41 audio seconds per second: not best-in-class, and in fact below most competitors.

Does that translate to medical settings?

It’s important to ask whether a generalist scribe tool performs well in specialized settings such as medical conversations. It might lack the fine-tuning for technical terms and abbreviations, perhaps even for context-specific background noise.

So far, Scribe V1 has only been tested on broad benchmarks such as FLEURS or Common Voice. To assess medical performance, you’d have to run it on healthcare-specific test sets and score the output, for example with a metric like BERTScore.

I’d love to do that personally, but our unpaid intern recently quit and I have no capacity to run large-scale benchmarks next to my stressful daily coffee chats…

Jokes aside, I ran a quick experiment using the following setting:

3 simulated doctor-patient conversations, male and female voices

little to no background noise

13:47 min audio in total, 20,855 words

2 languages (English, German)

intentionally high load of technical terms and abbreviations (“idiopathic”, “undulating”, “McCullen”, “tacrolimus”, “ERCP”, “ÖGD”…)

intentionally high load of numeric values (“38.5 degrees”, “120mg”, “130/70”, …)

To make it tangible, here are technical terms included in the audio:

The result: 17 word errors across all audio - a 0.1% error rate. Seems like I went too soft on Scribe V1… Interestingly, none of the mistakes were made on a technical medical term, and no patient would have been harmed.
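As a quick sanity check on that number, the arithmetic can be sketched in a few lines of Python. It takes the two figures reported above at face value (17 errors, 20,855 words); the function name is just a label for this example.

```python
def error_rate(errors: int, total_words: int) -> float:
    """Share of words transcribed incorrectly."""
    return errors / total_words


rate = error_rate(17, 20_855)
print(f"{rate:.2%}")                               # 0.08%
print(f"{rate * 1000:.1f} errors per 1,000 words")  # 0.8 errors per 1,000 words
```

So the 0.1% above is a rounded figure: the result is a little under one error per 1,000 words.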

Our test is too short to conclude much and we lack a control group, but on a qualitative level I am impressed. Scribe V1 catches complex terminology and beats any meeting note taker I have tested before.

Why Scribe V1 should matter

From first principles, a best-in-class word error rate should be one of medical scribe startups’ top priorities. Most arguments against LLM-based scribes in healthcare revolve around their potential to produce false information, endangering patients down the line.

There were multiple reports about OpenAI’s Whisper (used by Nabla for example) producing dangerously high levels of misinformation. Most shocking was its tendency to hallucinate, as reported in this paper. Some immediately called for regulation.

Consequently, shouldn’t the most precise tool - now Scribe V1 - win the market?

Why it doesn’t matter - in reality

In a real-life context, Scribe V1’s magic accuracy won’t move the needle. I spoke with several medical scribe founders over the past few days, and none of them will switch their transcription tech stack to ElevenLabs.

Here’s why:

The accuracy increase is too small. While technically impressive, customers likely won’t notice the improved error rate.

They have larger problems elsewhere. Adding features to the product and building out the sales team are 10x more impactful for their startup’s success.

Switching costs are decreasing, but not zero. Any switch to another transcription provider will cause friction.

Word error rate is not the only determinant of medical scribe quality. It’s a key component, but healthcare-specific fine-tuning, microphone setup and summarisation capability matter more.

What’s next?

Even if Scribe V1 isn’t a major breakthrough, I am 100% confident that we’ll solve medical scribes’ remaining accuracy issues soon. Adoption is increasing already and multiple European medical scribe startups have passed the €1m ARR threshold.

Heal Capital hasn’t invested in the field yet… should we reconsider?

Happy Monday,

Lucas